I get this question a lot: how do I get Google to notice me? You may have followed a tutorial for indexing your site with Google Webmaster Tools, and even worked your butt off for a few good backlinks. So why aren’t you coming up in searches?

Well, one issue I often see is that people forget about robots.txt. You can have all the right metadata, a perfectly constructed site, and Google Analytics in place, but if you haven’t told Google how to crawl your site, the Googlebot may not crawl it the way you want, or as often as you’d like. At least not until your website gets more traffic.

So how do we fix this little problem? And what in the world is a robots.txt file anyway?

Lost Robots

A complete website has a lot of pages and parts, and you don’t necessarily want Google crawling and indexing all of them. If there is no robots.txt file in your site’s root folder, Google has no directions, so it may crawl and index everything from plugin function categories to shop labels, image paths, and everything in between. You don’t want this. When Google looks at your site with ranking in mind, it wants to get good information to your readers and customers as quickly and efficiently as possible. If your site is a mass of thousands of indexed paths that provide no value, Google will turn its nose up at you.

There are other reasons to get a robots.txt file going on your site, but the most practical one is simply that it tells Google what it’s OK to crawl, and what to leave alone.
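To make the “what to crawl, what to skip” idea concrete, here’s a minimal Python sketch of how a well-behaved crawler consults robots.txt before fetching a page. It uses the standard library’s `urllib.robotparser`; the example rules, the `can_crawl` helper, and the example.com URLs are illustrative assumptions, not Google’s own code.

```python
from urllib.robotparser import RobotFileParser

# Illustrative rules only; the directives sit on consecutive lines.
EXAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /feed/
"""


def can_crawl(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if `user_agent` may fetch `url` under the given robots.txt text."""
    parser = RobotFileParser()
    # parse() takes the file's lines directly, so no network request is made.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in (
        "https://example.com/my-first-post/",
        "https://example.com/wp-admin/options.php",
        "https://example.com/feed/",
    ):
        print(url, "->", "crawl" if can_crawl(EXAMPLE_ROBOTS_TXT, url) else "skip")
```

Python’s parser also treats a blank line directly after `User-agent:` as the end of that group, which is one reason to keep the directives on consecutive lines.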

But how do you generate one?

Time for Some SEO Plugin Magic

If you’re running WordPress, I highly suggest the All in One SEO plugin. Many people swear by Yoast; however, that plugin adds a lot of extraneous code and often slows websites down. Ultimately, use whichever you’re comfortable with, but my money is on All in One SEO. Once it’s downloaded and installed, you might also want to head over to Google Webmaster Tools to make sure your website is submitted there.

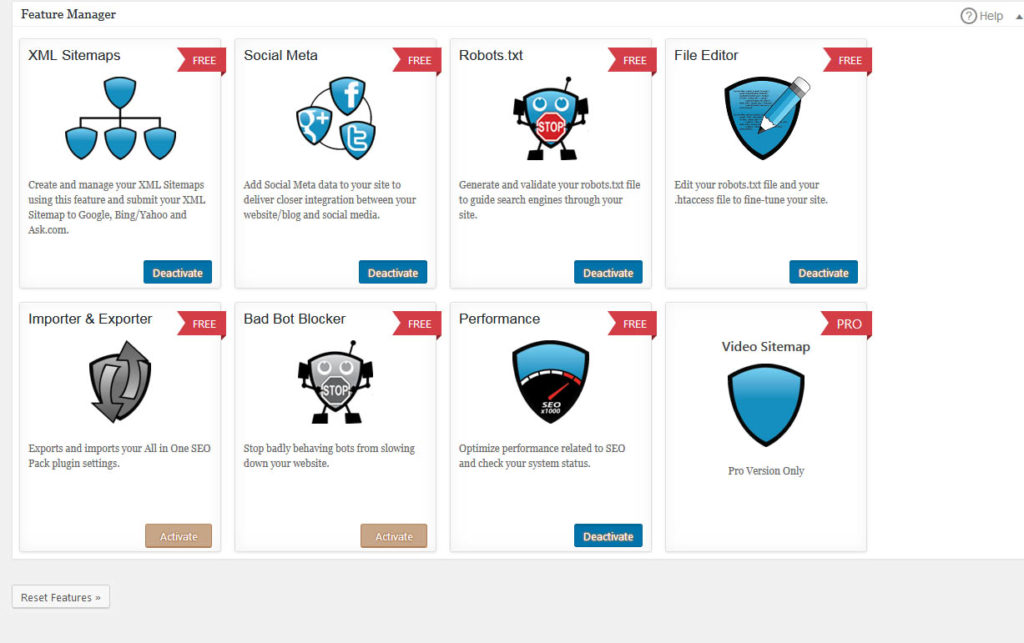

Hop over to the Feature Manager and activate everything shown as active in the screenshot above; it’s all free and all useful. For our purposes here, you specifically want the File Editor and Robots.txt features activated.

There are two ways to make sure your website has a robots.txt. One is simply to activate the Robots.txt feature, which generates a file if you don’t already have one. Once the feature is active, a Robots.txt tab appears in the sidebar where you can add and remove rules. This can be useful, but I prefer to do things somewhat in reverse.

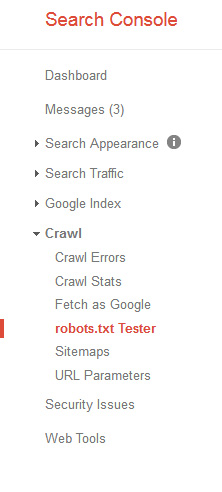

Google Webmaster Tools, now called Google Search Console, lets you draft a robots.txt file right there on the spot. Head over to the Search Console and look in the sidebar for the Crawl section; it has a submenu item for robots.txt, which is the one we need.

Here, you can write your rules by hand, test them, and submit them to Google right away. Which rules you use is up to you, but here are the ones I use:

```text
User-agent: *
Disallow: /wp-admin/
Disallow: /categories/
Disallow: /cgi-bin/
Disallow: /author/
Disallow: /backup/
Disallow: /archives/
Disallow: /trackback/
Disallow: /feed/
Disallow: /wp-includes/
Disallow: /go/
Allow: /wp-admin/admin-ajax.php
```
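Before you paste these rules anywhere, you can sanity-check them locally. Google picks the longest matching rule, with Allow winning a tie (the behavior RFC 9309 describes), which is why `Allow: /wp-admin/admin-ajax.php` overrides the broader `Disallow: /wp-admin/`. Python’s built-in `urllib.robotparser` applies the first matching rule instead and would report admin-ajax.php as blocked, so this minimal sketch hand-rolls the longest-match check. It assumes a single `User-agent: *` group and ignores the `*` and `$` wildcards, which these rules don’t use; the function names are hypothetical.

```python
from urllib.parse import urlsplit

# The rules from this post, written on consecutive lines.
MY_RULES = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /categories/
Disallow: /cgi-bin/
Disallow: /author/
Disallow: /backup/
Disallow: /archives/
Disallow: /trackback/
Disallow: /feed/
Disallow: /wp-includes/
Disallow: /go/
Allow: /wp-admin/admin-ajax.php
"""


def parse_rules(robots_txt: str) -> list[tuple[bool, str]]:
    """Collect (is_allow, path_prefix) pairs; assumes one User-agent: * group, no wildcards."""
    rules = []
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding spaces
        field, sep, value = line.partition(":")
        field, value = field.strip().lower(), value.strip()
        # An empty Disallow value blocks nothing, so it is skipped.
        if sep and field in ("allow", "disallow") and value:
            rules.append((field == "allow", value))
    return rules


def is_allowed(url: str, rules: list[tuple[bool, str]]) -> bool:
    """Longest matching prefix wins; on a tie, Allow wins."""
    parts = urlsplit(url)
    target = parts.path or "/"
    if parts.query:
        target += "?" + parts.query
    best_len, allowed = -1, True  # no matching rule means the URL may be crawled
    for is_allow, prefix in rules:
        if target.startswith(prefix):
            if len(prefix) > best_len or (len(prefix) == best_len and is_allow):
                best_len, allowed = len(prefix), is_allow
    return allowed


if __name__ == "__main__":
    rules = parse_rules(MY_RULES)
    for path in ("/my-first-post/", "/wp-admin/", "/wp-admin/admin-ajax.php", "/feed/"):
        print(path, is_allowed("https://example.com" + path, rules))
```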

These rules keep Google out of admin, system, feed, author, and archive paths so that it spends its time crawling your actual content. Feel free to adjust them, but do keep archives, backup, wp-admin, and wp-includes disallowed; there’s no reason for Google to crawl any of that.
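If you tweak the rules, a quick check like this can confirm that those four paths are still disallowed. It’s a minimal sketch that assumes each rule sits on its own `Disallow:` line with exactly that path; `missing_disallows` is a hypothetical helper name.

```python
# The four paths this post says to always keep blocked.
REQUIRED_DISALLOWS = ("/archives/", "/backup/", "/wp-admin/", "/wp-includes/")


def missing_disallows(robots_txt: str, required=REQUIRED_DISALLOWS) -> list[str]:
    """Return the required paths that have no matching 'Disallow:' line."""
    present = set()
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # ignore comments and surrounding spaces
        field, sep, value = line.partition(":")
        if sep and field.strip().lower() == "disallow":
            present.add(value.strip())
    return [path for path in required if path not in present]


if __name__ == "__main__":
    draft = "User-agent: *\nDisallow: /wp-admin/\nDisallow: /feed/\n"
    print(missing_disallows(draft))  # ['/archives/', '/backup/', '/wp-includes/']
```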

Once your rules are written in the Search Console, confirm them for Google by placing the same rules on your site with All in One SEO. Open the File Editor feature, click the Robots.txt tab if it isn’t already selected, paste the rules into the space provided, and save. Then confirm your file back in the Search Console, and all will be right with the world.
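To double-check that the file your site now serves matches what you wrote in the Search Console, you could fetch it and compare the directives. This is a minimal sketch, assuming your site serves robots.txt over HTTP(S) at the root of the domain; the helper names are hypothetical, and the comparison ignores comments, blank lines, and the capitalization of field names while comparing the directives in order.

```python
from urllib.parse import urljoin
from urllib.request import urlopen


def robots_url(site: str) -> str:
    """Crawlers only read robots.txt from the root of the host."""
    return urljoin(site, "/robots.txt")


def directives(robots_txt: str) -> list[tuple[str, str]]:
    """Reduce a robots.txt to (field, value) pairs, skipping comments and blank lines."""
    pairs = []
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        field, _, value = line.partition(":")
        pairs.append((field.strip().lower(), value.strip()))
    return pairs


def live_file_matches(site: str, expected: str, timeout: float = 10.0) -> bool:
    """Fetch the deployed robots.txt (one real HTTP request) and compare it with `expected`."""
    with urlopen(robots_url(site), timeout=timeout) as response:
        live = response.read().decode("utf-8", errors="replace")
    return directives(live) == directives(expected)
```

For example, `live_file_matches("https://yourdomain.com/", my_rules)` returns True when the live directives match the rules you pasted. If the file is missing, `urlopen` raises `urllib.error.HTTPError` (a 404), which tells you the file isn’t live yet.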

Foundation of SEO

There are many factors that go into how Google ranks you, but you don’t want to start off “behind the line,” so to speak. To give your website a fair chance in the search results, you need to go through the tedious steps of generating a sitemap, making sure you have a robots.txt, and so on. This is the foundation of your SEO; everything else comes afterwards. Take full advantage of the tools in the Search Console, and if it flags any errors, take care of them. Note that not all “errors” Google reports in the Search Console affect your search rankings, but it’s good practice to make sure your site is being crawled correctly and throws no errors. Will you get penalized if you don’t fix them? It’s hard to say, but everything goes through Google, and if they tell you there’s something on your site they don’t like, it’s probably a good idea to listen.

Have any questions about getting your robots.txt up and running? Just leave a comment!